Every SEO dreams about the day when they open the Pages Indexing Report in Google Search Console and see everything in green. Unfortunately, that’s rarely the case.

It’s inevitable that as your site grows, this report will show a mix of pages in both the Indexed and Not Indexed states. While you can use the Indexing API or improve your site structure and internal linking to get your pages indexed faster, there’ll always be pages stuck in the Not Indexed state.

The reality is that there’s not much we can do, but let’s talk about it.

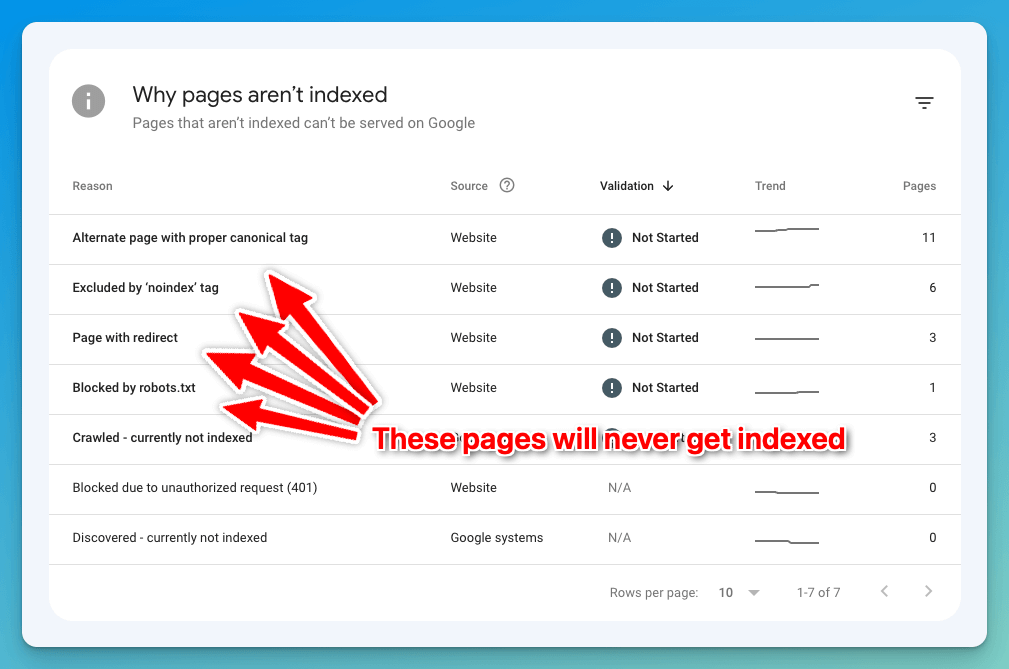

Why do I have pages that are not indexed?

There are many reasons why a page might not be indexed. Some of them can actually be fixed; others are simply out of our control. Imagine that you created a blog post with the URL /blog/best-seo-tips-2023 and that page was indexed by Google.

It’s now 2024 and you decide to refresh the content and update the URL to /blog/best-seo-tips-2024. You’ve also set up a redirect from the old URL to the new one, doing everything Google recommends when changing URLs.

So what happened to those URLs in Google Search Console?

The new URL will be indexed as a new page and will get all the traffic and “SEO juice” from the old URL. The old URL, however, will be stuck forever in the Not Indexed state with the Page with redirect reason. The same happens when you delete a page; the only difference is that the reason will be Not found (404).

That sucks, doesn’t it?
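To make the two cases above concrete, here’s a tiny Python sketch that maps the HTTP status an old URL returns to the reason I’d expect to see in the report. The function name and the set of redirect status codes are my own assumptions for illustration, and only the two reasons from this post are modeled; Search Console has more reasons than these.

```python
# Assumption: these are the redirect status codes we care about here.
REDIRECT_STATUSES = {301, 302, 307, 308}


def expected_reason(status_code: int) -> str:
    """Return the Not Indexed reason described in this post for a status code."""
    if status_code in REDIRECT_STATUSES:
        # The old URL now points somewhere else.
        return "Page with redirect"
    if status_code == 404:
        # The page was deleted.
        return "Not found (404)"
    # Anything else is outside the two cases discussed above.
    return "Not covered in this post"


if __name__ == "__main__":
    for code in (301, 404, 200):
        print(code, "->", expected_reason(code))
```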

How to clean up the Pages Indexing Report

As website owners, we all have pages that we no longer want indexed. It may be due to a previous mistake, a change in the URL, or simply because the page no longer exists.

Here’s where some people would say: why don’t you exclude them using robots.txt or set the noindex meta tag?

I’m sorry, but it’s not that simple, and it won’t work. Those pages have already been crawled and indexed by Google, and they will remain in the report indefinitely under the Blocked by robots.txt or URL marked ‘noindex’ reasons.
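If you want to see which URLs a robots.txt rule would actually block, here’s a minimal sketch using Python’s standard `urllib.robotparser`. The rules and the example.com URLs are hypothetical, and the `is_blocked` helper is my own name for illustration. It only shows how the rule is evaluated; it does nothing to the report itself.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content that blocks the old blog post.
ROBOTS_TXT = """\
User-agent: *
Disallow: /blog/best-seo-tips-2023
"""


def is_blocked(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt rules disallow crawling the URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for path in ("/blog/best-seo-tips-2023", "/blog/best-seo-tips-2024"):
        url = "https://example.com" + path
        print(url, "blocked:", is_blocked(ROBOTS_TXT, url))
```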

The reality is simple and unfortunately a sad one: there’s nothing we can do to remove those pages from the report. This report serves as a historical record of all pages known to Google.

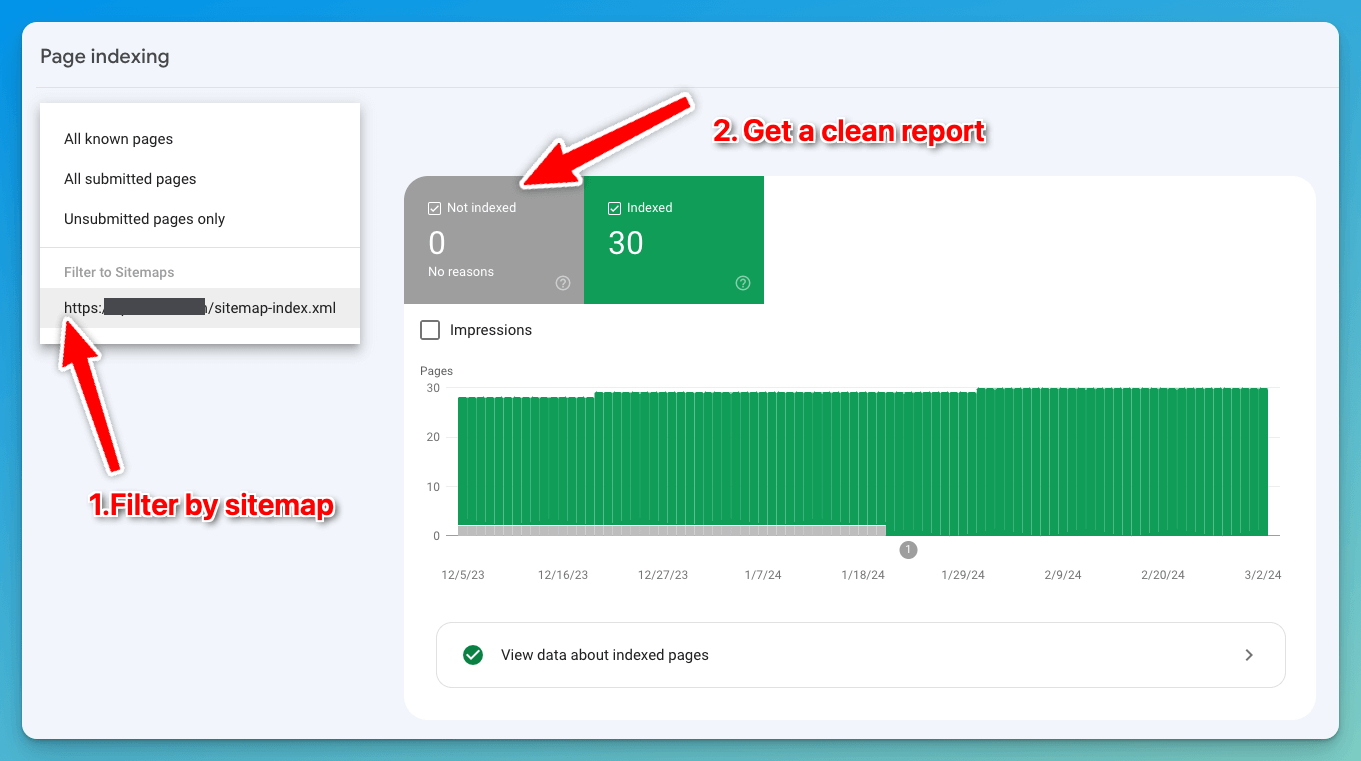

Solution: Use a sitemap

Did you know you can filter the report by sitemap? This is actually the only option to get a clean report.

If you have a sitemap that is up to date (which you should) with the pages you really want indexed, you can use it to filter the report.
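If you don’t have a sitemap yet, here’s a minimal sketch of generating one in Python from a list of the URLs you want indexed. The `build_sitemap` name and the example.com URLs are assumptions for illustration; most CMSs and frameworks can generate this file for you, so treat it as a picture of what the file contains rather than the way to do it.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Build a sitemap XML string with one <url><loc> entry per URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical list: only the pages we really want indexed.
    pages = [
        "https://example.com/",
        "https://example.com/blog/best-seo-tips-2024",
    ]
    print(build_sitemap(pages))
```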

By using this filter, I can see the indexing status of the pages I truly care about. A red flag goes up whenever I see a page that’s in the sitemap but not indexed. That’s when I know I have to take action and fix it.
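The same check can be sketched outside of Search Console. The Python below assumes you already have a set of indexed URLs from somewhere (for example, copied out of the report; how you obtain that list is up to you and not shown here), and it flags every sitemap URL missing from that set. The function names and sample data are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace prefix mapping for the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap with the two pages we care about.
SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc></url>
  <url><loc>https://example.com/blog/best-seo-tips-2024</loc></url>
</urlset>"""


def sitemap_urls(sitemap_xml):
    """Extract every <loc> value from a sitemap XML string."""
    root = ET.fromstring(sitemap_xml)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", NS) if loc.text}


def not_indexed(sitemap_xml, indexed_urls):
    """Return sitemap URLs that are missing from the indexed set, sorted."""
    return sorted(sitemap_urls(sitemap_xml) - set(indexed_urls))


if __name__ == "__main__":
    # Hypothetical: only the homepage is indexed so far.
    indexed = {"https://example.com/"}
    for url in not_indexed(SAMPLE_SITEMAP, indexed):
        print("Needs attention:", url)
```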

What about the Temporary Removals tool?

I initially thought that the Temporary Removals tool within Google Search Console would be the solution to this problem, but it’s not.

As the name suggests, it’s a temporary removal, and it’s not meant to be used to remove pages from the report. It’s meant to be used when you want to remove a page from Google Search results for a short period of time.

After you submit the request, the page will be removed from Google Search results for some time, but it’ll still be in the Pages Indexing Report and eventually it’ll be back in the search results.

Conclusion

Sitemaps are extremely important for SEO, and this is just another reason why you should have one and keep it up to date.

If you end up finding a different solution to this problem, please let me know. I’d love to be able to actually remove those pages from the report. But until then, I’ll keep using the sitemap filter and so should you.
